Benchmarking Cast

This test is over a year old.

More recent, less detailed tests served 4,269 listeners on an Intel Atom. That came to around 2 gigabits per second, more than the network cards in most servers can handle.

Performance has always been an important part of our mission. We benchmarked Cast with more than 2,500 simultaneous listeners. The benchmark ran on a single server with one 3.7 GHz CPU core and 2 GB of RAM. To spread the network load, we set up three clients in three different locations. The graph below shows the results.

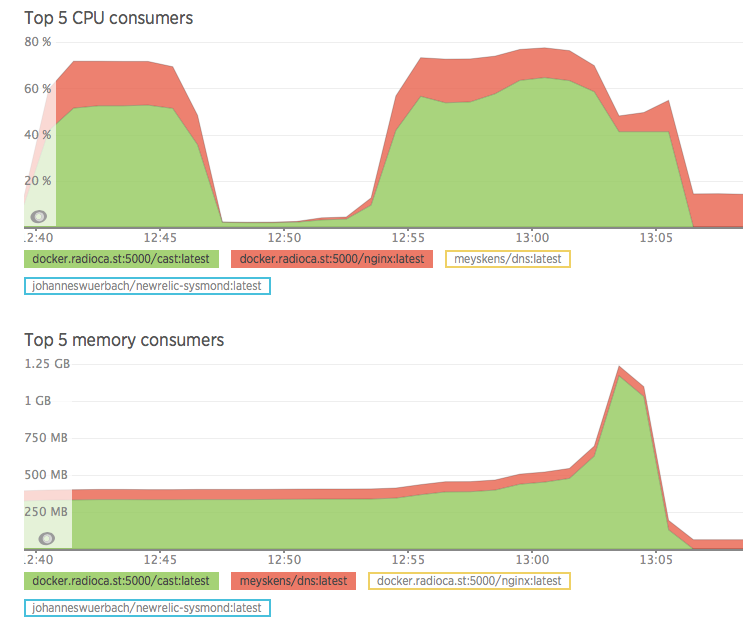

CPU and memory usage on our test node during the benchmark.
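
As a rough check on why the load was spread over three clients, the aggregate bandwidth can be estimated from the listener count and the stream bitrate. This is only a sketch: it assumes every listener pulled the 128 kbps stream named in the script URL, it ignores protocol overhead, and `aggregate_mbps` is a hypothetical helper name.

```shell
#!/bin/sh
# Rough aggregate bandwidth for a number of listeners on one stream.
# Assumptions: every listener pulls the same bitrate and protocol overhead
# is ignored. aggregate_mbps is a hypothetical helper, not part of Cast.
aggregate_mbps() {
    listeners=$1
    bitrate_kbps=$2
    # listeners * kbps gives total kbps; divide by 1000 for Mbps (integer division)
    echo $(( listeners * bitrate_kbps / 1000 ))
}

# total for 2,500 listeners on a 128 kbps stream, in Mbit/s
aggregate_mbps 2500 128
```

Under these assumptions the benchmark moves roughly 320 Mbit/s in total, so splitting it across three clients keeps each client's share to a little over 100 Mbit/s.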

Cast's CPU usage increases linearly over the first 500 to 1,000 listeners. Beyond 1,000 listeners, CPU usage stabilises even though the number of listeners continues to increase.

After about 2,000 listeners, CPU usage drops sharply while memory usage increases.

Compared to SHOUTcast and Icecast, Cast might not seem resource-friendly. However, thanks to the asynchronous nature of Node.js, it continues to serve new clients and API requests at full speed, whereas Icecast and SHOUTcast would take some time to load the status page under this load.

The script

We want to thank the team at Icecast for sharing the following benchmark script. We added a cleanup routine that kills all curl processes when the script is stopped.

    #!/bin/sh
    #
    # Run concurrent curls that download from URL to /dev/null and append the
    # total bytes and average speed of each download to the results file.
    #

    # max concurrent curls to kick off
    max=500
    # curls to start per batch (must match the step added to count below)
    batch=10
    # how long to stay connected (in seconds)
    duration=99999999
    # how long to sleep between batches (in seconds); can be a decimal such as 0.5
    delay=1
    # url to request from
    URL=http://opencast.radioca.st/streams/128kbps

    # kill every curl process when the script is interrupted (Ctrl+C)
    cleanup() {
        echo "SIGINT received, killing all curl"
        killall --wait curl
        exit
    }
    trap cleanup INT

    # start with an empty results file
    : > results

    while true
    do
        count=1
        while [ "$count" -le "$max" ]
        do
            # start one batch of background curls
            i=0
            while [ "$i" -lt "$batch" ]
            do
                curl -o /dev/null -m "$duration" -s -w "bytes %{size_download} avg %{speed_download} " "$URL" >> results &
                i=$((i + 1))
            done
            [ -n "$delay" ] && sleep "$delay"
            count=$((count + batch))
        done
        # block until every curl has exited, then start the next round
        wait
    done

    # not reached in practice: the loop above only ends through the SIGINT trap
    echo "done"
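
The script only appends raw `bytes ... avg ...` entries to the results file, so a small helper is useful for reading them back. The following is a minimal sketch, assuming the entries keep exactly the layout produced by the `-w` format string above; `summarise_results` is a hypothetical name and is not part of the original Icecast script.

```shell
#!/bin/sh
# Summarise a results file written by the benchmark script.
# Assumption: entries look like "bytes <size> avg <speed> ", separated by
# whitespace, as produced by the curl -w format string in the script.
# summarise_results is a hypothetical helper name.
summarise_results() {
    awk '
        {
            # each label is followed by its number, so read them in pairs
            for (i = 1; i < NF; i++) {
                if ($i == "bytes") { total += $(i + 1); n++ }
                else if ($i == "avg") { speed += $(i + 1) }
            }
        }
        END {
            if (n == 0) { print "no completed downloads"; exit }
            # mean_speed is the mean of curl speed_download values (bytes per second)
            printf "downloads %d total_bytes %.0f mean_speed %.0f\n", n, total, speed / n
        }' "$1"
}
```

Calling `summarise_results results` after stopping the benchmark prints one line with the number of completed downloads, the total bytes transferred and the mean download speed. Only curls that exited normally write an entry, so processes killed by the cleanup routine may not be counted.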

Note from the team

Even though this looks pretty good, we're never satisfied when it comes to performance. In the next releases we'll work on improving stream handling to lower CPU and memory usage even further.

Cast will continue to improve.
